The opportunity

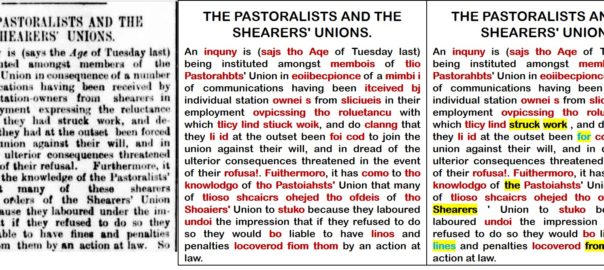

As documented elsewhere on this blog, I recently spent four years of my life playing with computational methods for analysing text, hoping to advance, in some small way, the use of such methods within social science. Along the way, I became interested in using topic models and related techniques to assist the development of public policy. Governments regularly invite public comment on things like policy proposals, impact assessments, and inquiries into controversial issues. Sometimes, the public’s response can be overwhelming, flooding a government department or parliamentary office with hundreds or thousands of submissions, all of which the government is obliged to somehow ‘consider’.

Not having been directly at the receiving end of this process, I’m not entirely sure how the teams responsible go about ‘considering’ thousands of public submissions. But this task strikes me as an excellent use-case for computational techniques that, with minimal supervision, can reveal thematic structures within large collections of texts. I’m not suggesting that we can delegate to computers the task of reading public submissions: that would be wrong even if it were possible. What we can do, however, is use computers to assist the process of navigating, interpreting and organising an overwhelming number of submissions.

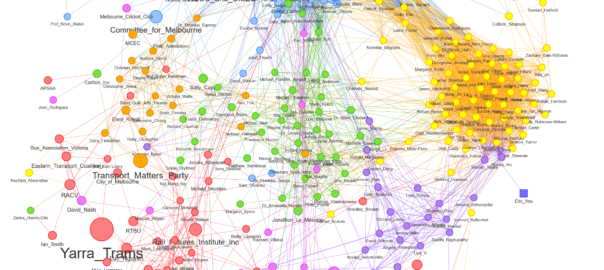

A few years back, I helped a panellist on the Northern Territory’s Scientific Inquiry into Hydraulic Fracturing to analyse concerns about social impacts expressed in more than 600 public submissions. Rather than manually reading every submission to see which ones were relevant, I used a computational technique called probabilistic topic modelling to automatically index the submissions according to the topics they discussed. I was then able to focus my attention on those submissions that discussed social impacts, making the job a whole lot easier than it otherwise would have been. In addition, the topic model helped me to categorise the submissions according to the types of social impacts they discussed, and provided a direct measurement of how much attention each type of impact had received.

This experience proved that computational text analysis methods can indeed be useful for assessing public input to policy processes. However, it was far from perfect case study, as I was operating only on the periphery of the assessment process. The value of computational methods could be even greater if they were incorporated into the process from the outset. In that case, for example, I could have indexed the submissions against topics besides social impacts. As well as making life easier for the panellists responsible for other topics, a more complete topical index would have enabled an easy analysis of which issues were of most interest to each category of stakeholder, or to all submitters taken together.

In this post, I want to illustrate how topic modelling and other computational text analysis methods can contribute to the assessment of public submissions to policy issues. I do this by performing a high-level analysis of submissions to the Victorian parliament about a proposal to expand Melbourne’s ‘free tram zone’. I chose this particular inquiry because it has not yet concluded (submissions have closed, but the report is not due until December) and because it received more than 400 hundred submissions, which although perhaps not an overwhelming number, is surely enough to create a sense of foreboding in the person who has to read them all.

This analysis is meant to be demonstrative rather than definitive. The methods I’ve used are experimental and could be refined. More importantly, these methods are not supposed to stand on their own, but rather should be integrated into the rest of the analytical process, which obviously I am not doing, since I do not work for the Victorian Government. In other words, my aim here is not to provide an authoritative take on the content of the submissions, but to demonstrate how certain computational methods could assist the task of analysing these submissions. Continue reading Free as in trams: using text analytics to analyse public submissions